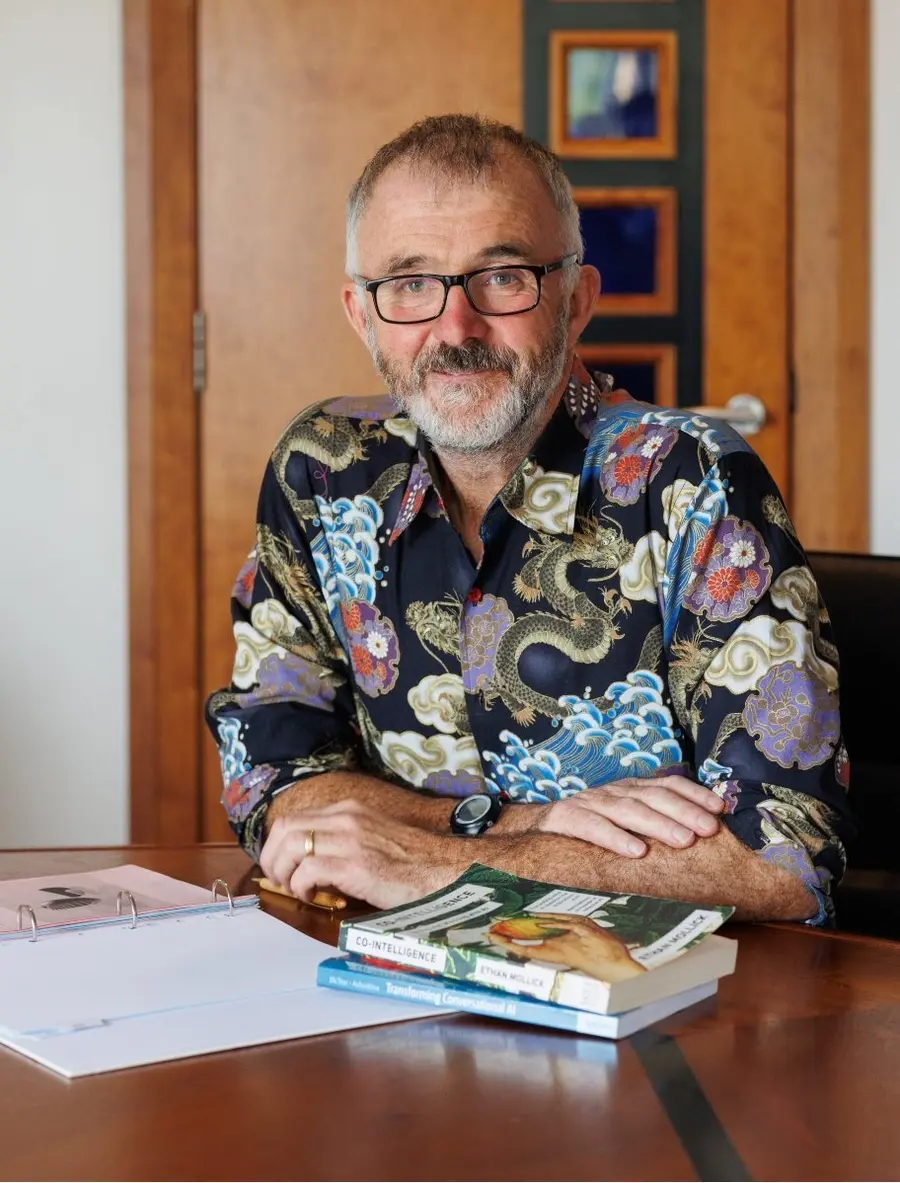

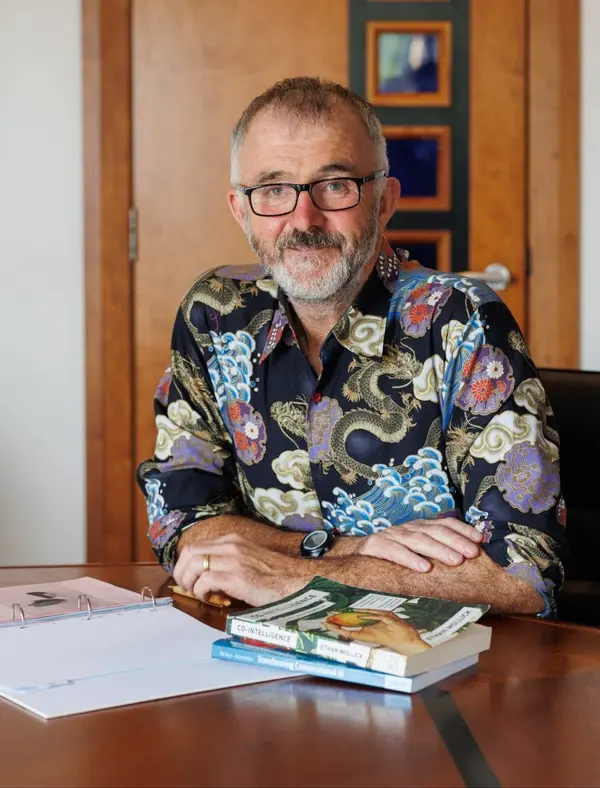

Barry Phillips BEM (CEO) founded Legal Island in 1998. He is a qualified barrister, trainer, coach and meditator and a regular speaker both here and abroad. He also volunteers as a mentor to aspiring law students on the Migrant Leaders Programme.

Barry has trained hundreds of HR professionals on how to use GenAI in the workplace and is the author of the book “ChatGPT in HR – A Practical Guide for Employers and HR Professionals”.

Barry is an Ironman and lists Russian language and wild camping as his favourite pastimes.

This week Barry Phillips argues that it may be time to relax the rules relating to use of ChatGPT in the workplace.

Transcript:

Hello Humans!

And welcome to the weekly podcast that breaks down critical AI developments for HR professionals—in five minutes or less. My name is Barry Phillips.

Let's talk about a risk that many organisations still don't fully understand: data training in generative AI tools.

Here's something that might surprise you. Until April 2023—five months after ChatGPT launched—OpenAI didn't offer users a way to opt out of data training. Every prompt, every question, every piece of information you typed was automatically used to train future models. That included personal data, commercially sensitive information, confidential HR records—all potentially incorporated into the system and, theoretically, capable of appearing in responses to other users anywhere in the world.

When OpenAI finally introduced the opt-out feature in April 2023, they did so quietly. Almost embarrassingly so. No fanfare, no announcements—just a small toggle buried in the settings.

Since then, organisations have scrambled to address this risk. At Legal Island, we've developed what we call the ABC Rule for AI safety:

A – Avoid inputting personal or commercially sensitive data

B – Be sure to turn off the training function

C – Check for accuracy every time

We've trained hundreds of employees on this approach. But here's the problem: it relies entirely on human memory and diligence. What happens when someone forgets to toggle that switch? What if they ignore the ABC Rule in a moment of convenience?

OpenAI's response came in September with their Business account—£25 per month, with training automatically turned off by default. A step forward, certainly. Combined with end-to-end encryption that even MI5 couldn't crack and comprehensive ABC training, you'd think that would be enough reassurance.

For some organisations, it is. But for many others—particularly in the public sector—it simply doesn't go far enough. The stakes are too high. The risks too unpredictable.

But here's the uncomfortable truth: while the public sector waits for perfect security that may never come, the private sector is racing ahead. They're automating recruitment, streamlining HR processes, and gaining competitive advantages that grow larger every day. At some point, caution becomes paralysis. Perhaps it's time for public sector regulators to ask themselves: are we protecting data, or are we just preventing progress?

The goalposts have moved significantly since 2023. Maybe our risk tolerance should move with them?

That's it for this week. Until next time, bye for now.