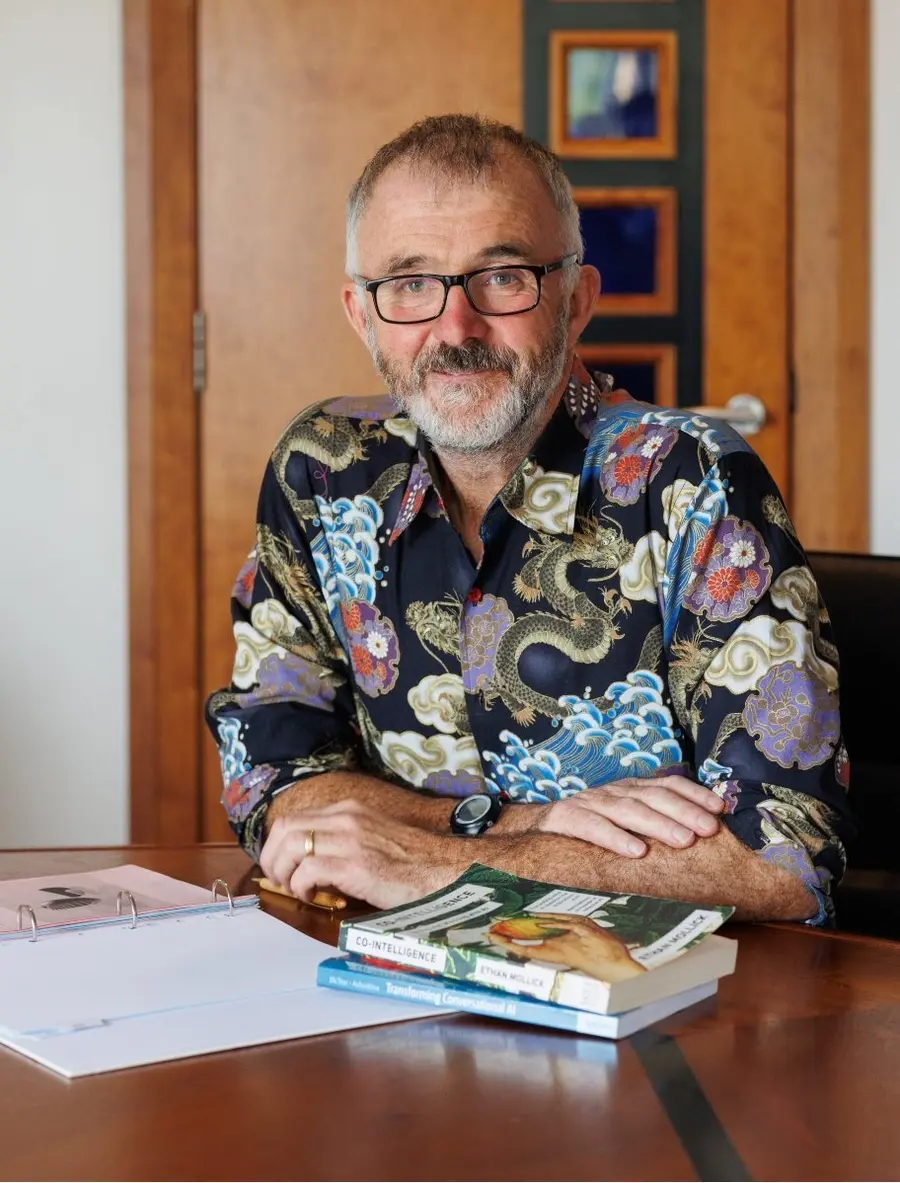

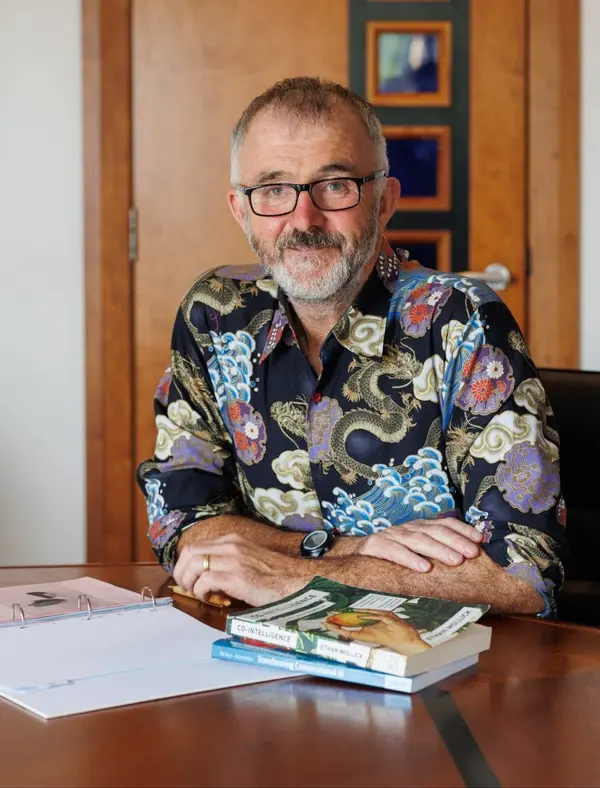

Barry Phillips (CEO) BEM founded Legal Island in 1998. He is a qualified barrister, trainer, coach and meditator and a regular speaker both here and abroad. He also volunteers as a mentor to aspiring law students on the Migrant Leaders Programme.

Barry has trained hundreds of HR professionals on how to use GenAI in the workplace and is the author of the book “ChatGPT in HR – A Practical Guide for Employers and HR Professionals”.

Barry is an Ironman and lists Russian language and wild camping as his favourite pastimes.

This week Barry Phillips offers his solution to the growing problem of how to regulate employee use of GenAI tools that haven’t been whitelisted.

Transcript:

Hello Humans!

And welcome to the weekly podcast that aims to summarise in five minutes or less a key AI development relevant to the world of HR. My name is Barry Phillips.

Today, we’re getting into a tricky, uncomfortable, but absolutely essential conversation: the dark use of AI in the workplace.

Now, when we talk about AI at work, it’s usually framed in bright colours—productivity, innovation, automation, efficiency. But there’s a shadow side. A quiet, unspoken reality that many organisations are only just beginning to confront.

And that’s this:

What happens when employees use AI tools that the organisation hasn’t approved—or worse, has specifically told them not to use?

It’s a real conundrum.

On the one hand, companies set rules, policies, and guardrails. They whitelist certain tools, they ban others, and they communicate the risks. But on the other hand… employees are curious, resourceful, and increasingly AI literate. They experiment. They try things at home. They test tools in their own time—and then, naturally, some of that thinking spills into the workplace.

So how do you regulate something you’ve already told people they shouldn’t be using… when they’re using it anyway?

Let’s be honest: you can’t police every search, every prompt, every moment of inspiration that comes from tools outside the corporate whitelist. And trying to clamp down harder often just drives behaviour underground.

So maybe—just maybe—the answer lies somewhere else.

Picture this:

You’re delivering your next training session on your organisation’s approved, whitelisted AI tools. Everyone is there, everyone is engaged, everyone is learning.

And then you add a small, subtle extra section. Something like:

“For those of you exploring other generative AI tools in your own time… here’s a quick piece of advice that might help you stay safe, compliant, and informed. Turn off the training function first, and follow the rule never to drop personal or commercially sensitive data into the prompt bar.” And then explain why.

You’re not validating the behaviour.

You’re not endorsing the behaviour.

But you are acknowledging reality—and equipping people with better awareness, which leads to better decisions.

It’s harm reduction for AI use.

It’s responsible. It’s pragmatic.

And frankly, it might be the only approach that actually works in the long run.

Because here’s the bigger truth:

This dilemma isn’t going away anytime soon.

As AI models continue to diverge in capability—some powerful, some experimental, some wildly ahead of the curve—the temptation for employees to explore will only grow stronger.

The gap between what’s whitelisted and what’s possible is widening.

And as that gap grows, organisations will have to rethink not just their policies, but their entire philosophy around AI literacy, experimentation, and trust.

We’re entering a future where saying “don’t use that” might simply not be enough.

Thanks for joining me today. If this sparked a thought—or a concern—feel free to share it. Because the conversation around AI in the workplace is just beginning… and we’re all going to need to be part of it.

Until next week, bye for now.