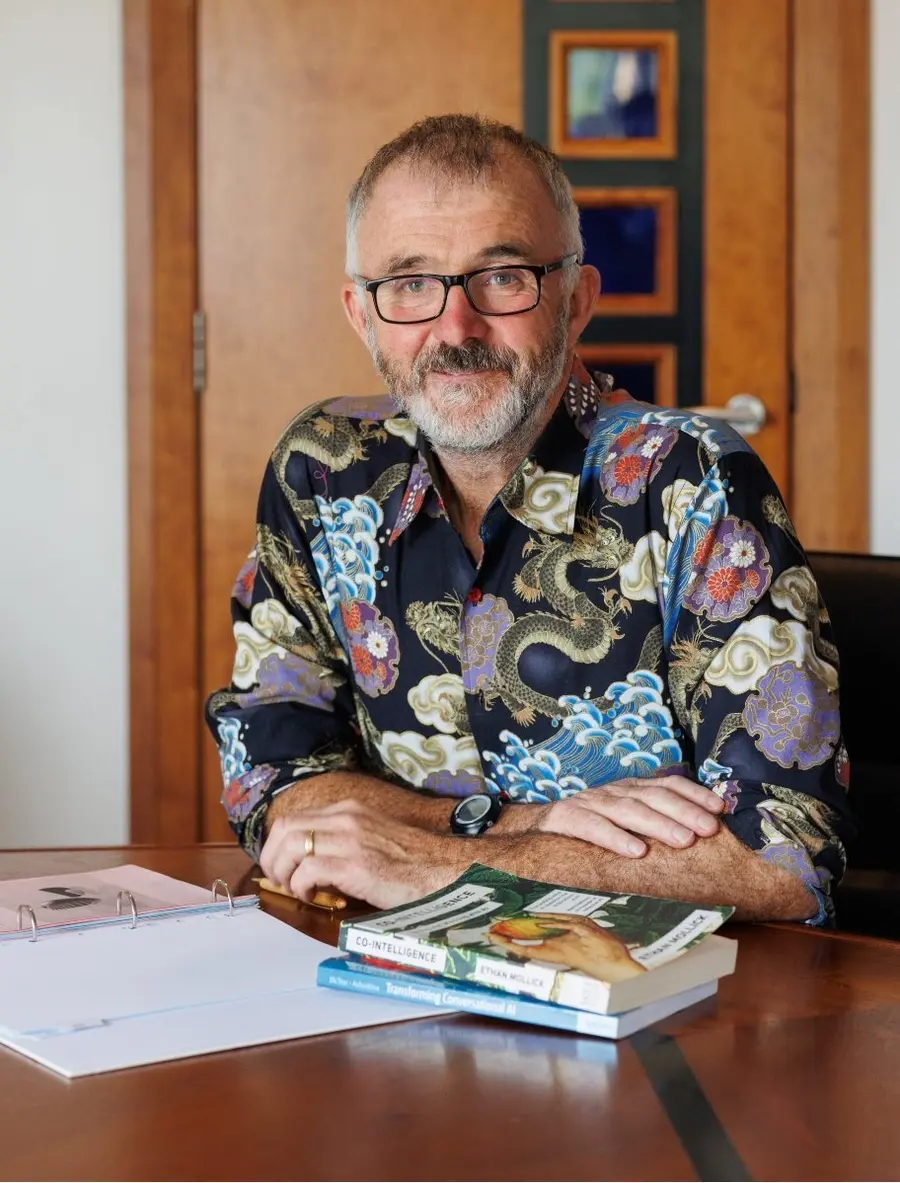

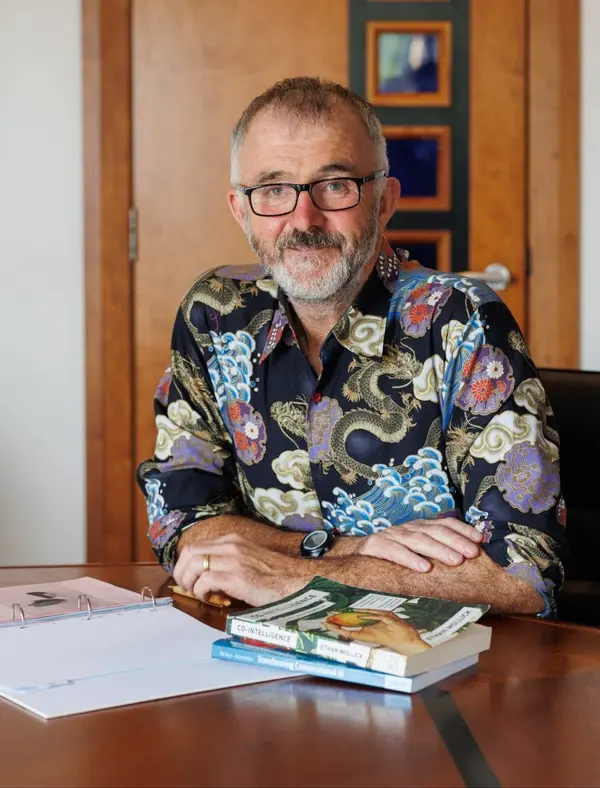

Barry Phillips BEM (CEO) founded Legal Island in 1998. He is a qualified barrister, trainer, coach and meditator and a regular speaker both here and abroad. He also volunteers as a mentor to aspiring law students on the Migrant Leaders Programme.

Barry has trained hundreds of HR professionals on how to use GenAI in the workplace and is the author of the book “ChatGPT in HR – A Practical Guide for Employers and HR Professionals”.

Barry is an Ironman and lists Russian language and wild camping as his favourite pastimes.

This week, Barry Phillips risks a single prediction for workplace AI and hopes he's wrong for this year at least.

Transcript:

Hello Humans! Happy New Year!

And welcome to the podcast that aims to summarise in five minutes or less each week an important development in AI relevant to the world of work. My name is Barry Phillips.

It's the beginning of 2026—that magical time when everyone wants to hear bold predictions about the year ahead. But honestly, who in their right mind would stick their neck out in a world this unpredictable? Especially when everything we say gets recorded, archived, and thrown back in our faces for eternity.

Well, me apparently. I'm about to do exactly that, and risk joining the hall of fame—or should I say hall of shame—alongside legends like Dr. Dionysius Lardner and Thomas Watson.

Let me introduce you. Dr. Lardner was an Irish science writer and lecturer at University College London back in the 1830s. He publicly warned that high-speed rail travel could be dangerous to human health, even fatal. And he wasn't some lone crank. Physicians and scientists of the era genuinely believed that the human body simply couldn't tolerate such velocity, and that passengers might not be able to breathe at such speeds. Imagine.

Then there's Thomas J. Watson Sr., the chairman of IBM. In 1943, this titan of business famously declared: "I think there is a world market for maybe five computers." Five computers!

So here I go, adding my name to this illustrious list.

My prediction for 2026: This is the year organisations will finally take AI data security seriously—but only because they'll be forced to. By year's end, at least one major data protection regulator will issue a significant fine directly tied to AI misuse. And when I say "finally," I mean it. We surveyed hundreds of employers at the end of last year, and the majority admitted their AI literacy training—including safe AI use—was either poor or non-existent.

Right now, it's still the Wild West out there. And for those of us who were around in the 1990s when the internet first invaded the workplace, this should feel painfully familiar.

Remember? The internet seemed to appear out of nowhere, catching data protection officers and HR professionals completely off guard. Employers were slow to regulate it. They only got serious when employees started misusing it, whether by playing fast and loose with company data or by wasting time online. How many of us remember frantically closing our browser when the boss walked past while we were scrolling through Friends Reunited or checking our personal Hotmail? Novelties like JenniCam, Usenet and Myspace captured our attention but contributed very little to productive work.

So here's how I think my prediction plays out: The data breach will happen when a poorly trained employee dumps a mountain of personal data into an LLM. The model will happily gobble it all up—training function switched on—and then reproduce that sensitive information elsewhere, over and over, to countless users.

There you have it. Crystal clear.

Let's just hope, for everyone's sake, that I'm as spectacularly wrong as Dr. Lardner and Thomas Watson.

Until next week.

Bye for now.

AI Literacy Skills at Work: Safe, Ethical and Effective Use
